Devices that pump blood out of the body, oxygenate it, and pump it back in are normally large and cumbersome. Our client is creating a version that can be carried in one hand.

A collection of standard icons we had to draw from to meet regulatory requirements.

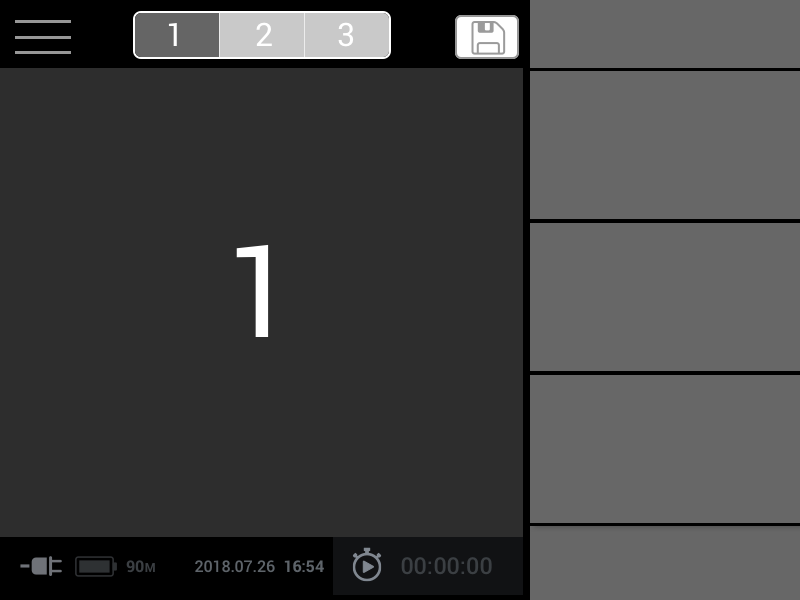

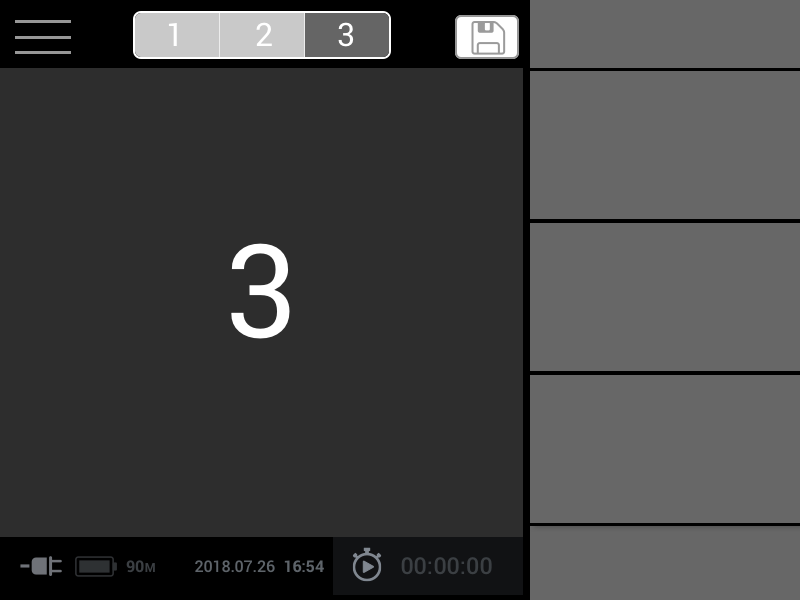

first tab active

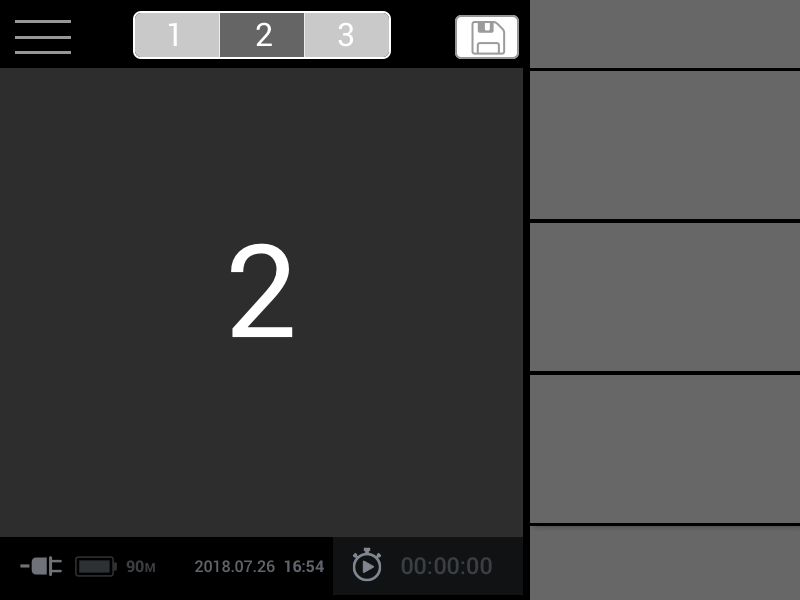

middle tab active

last tab active

Persistent footer

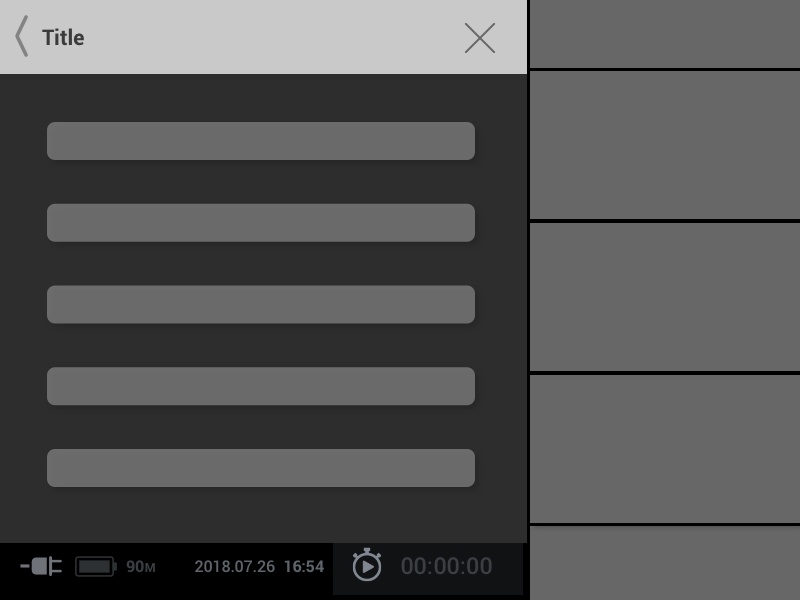

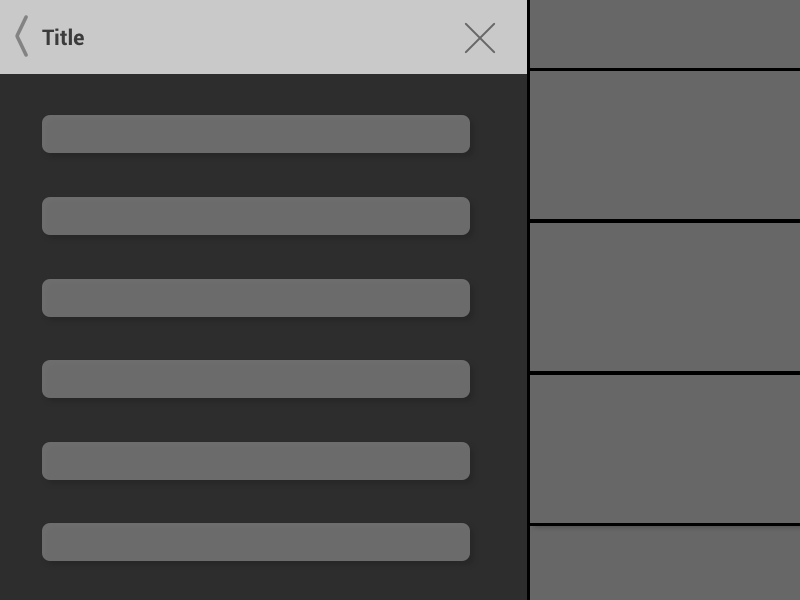

Full-length modal

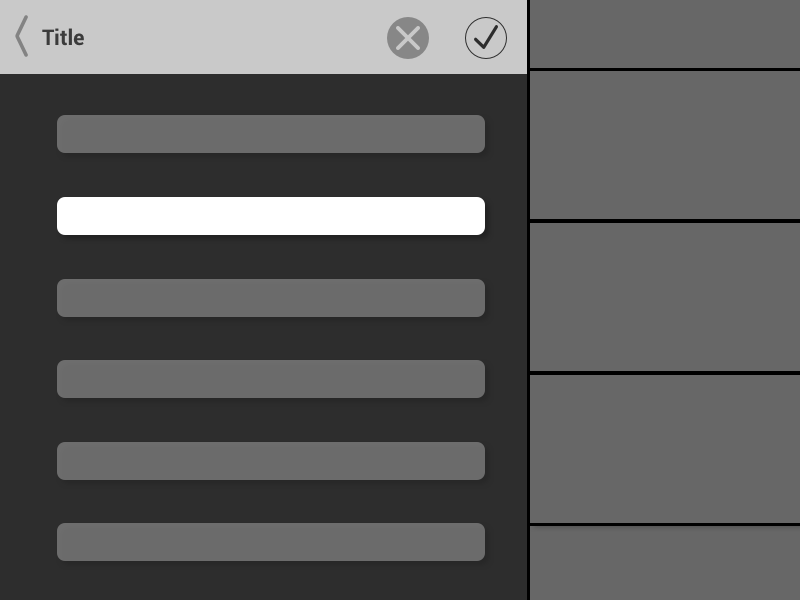

changes made...

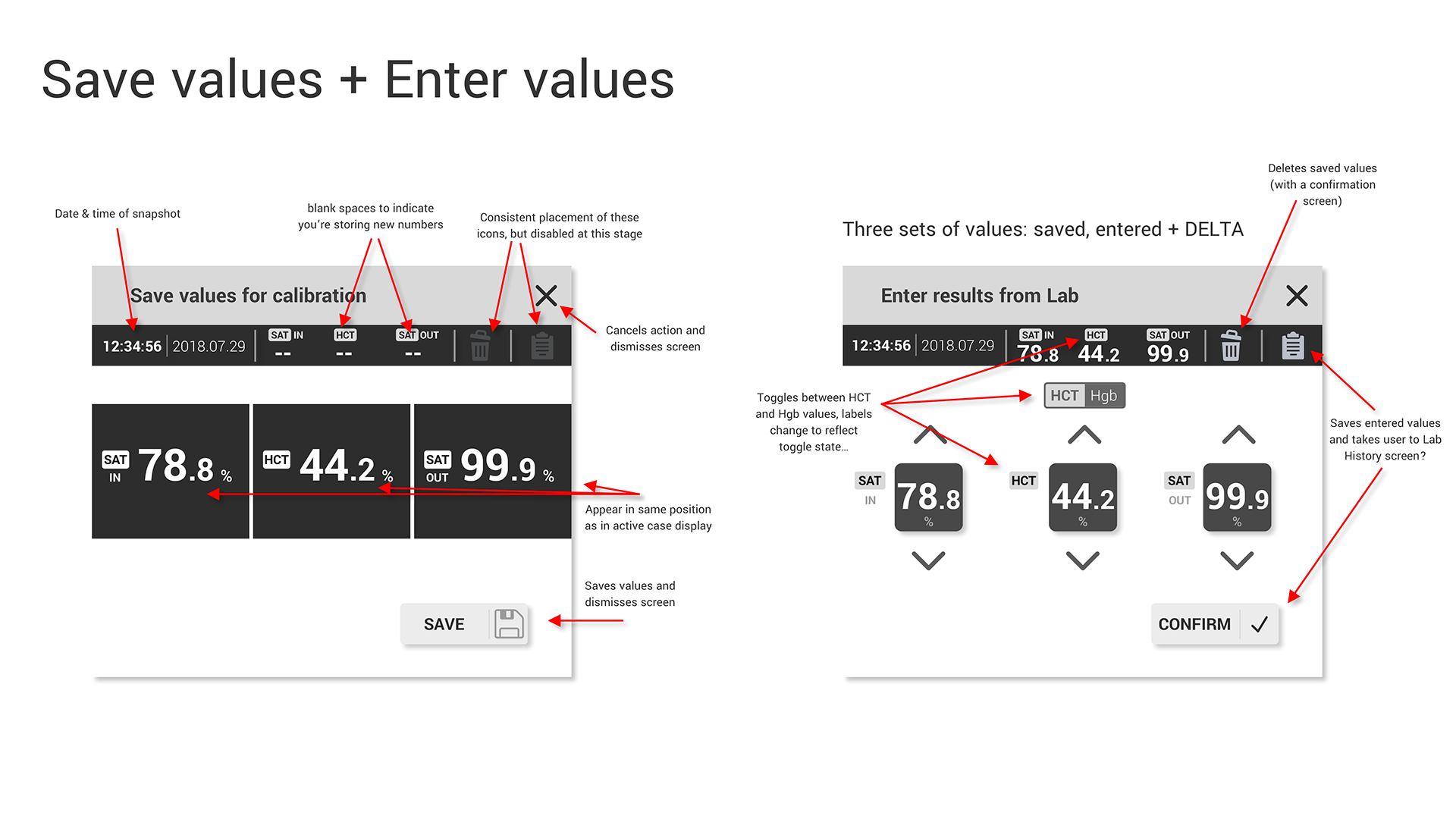

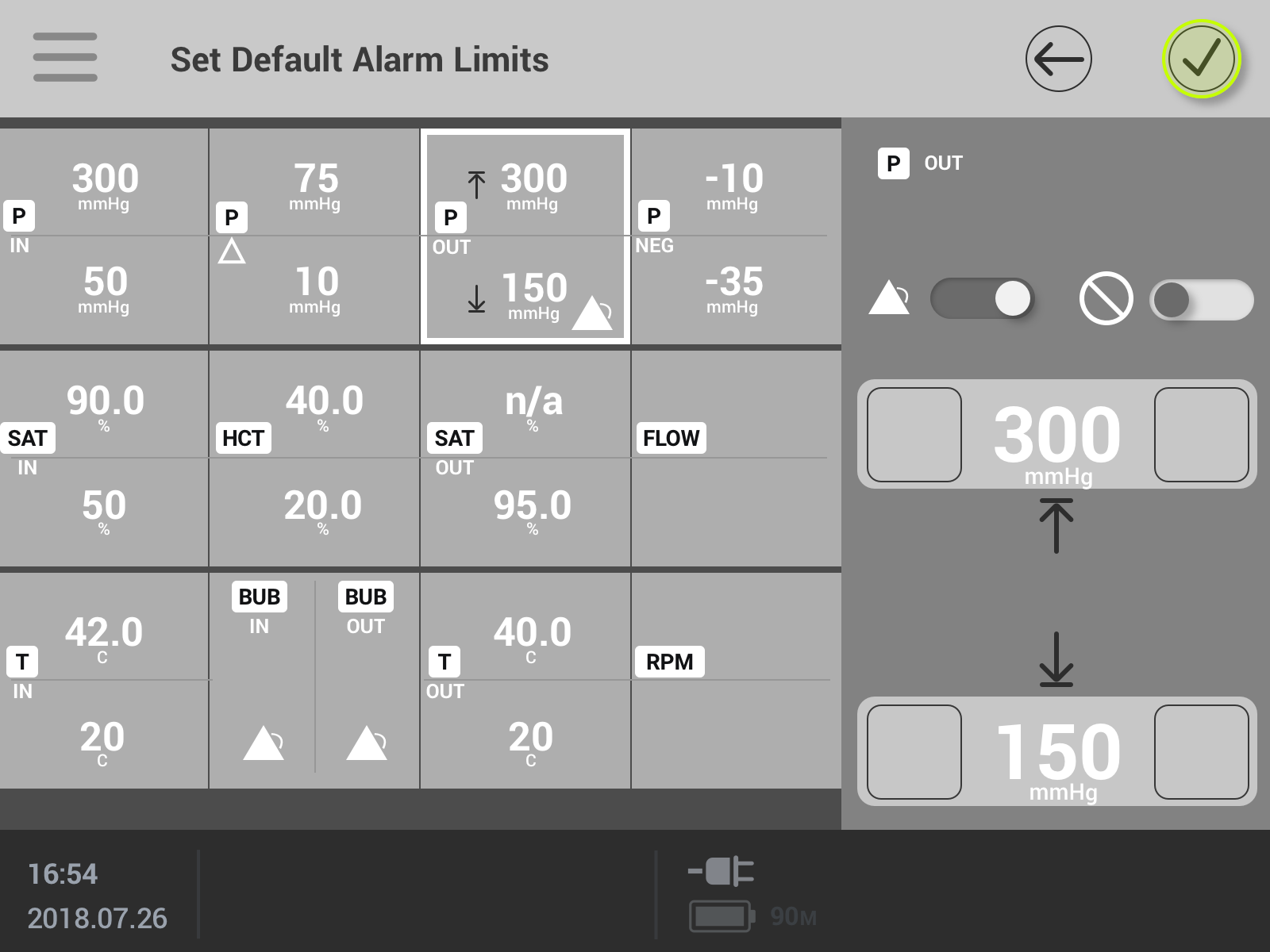

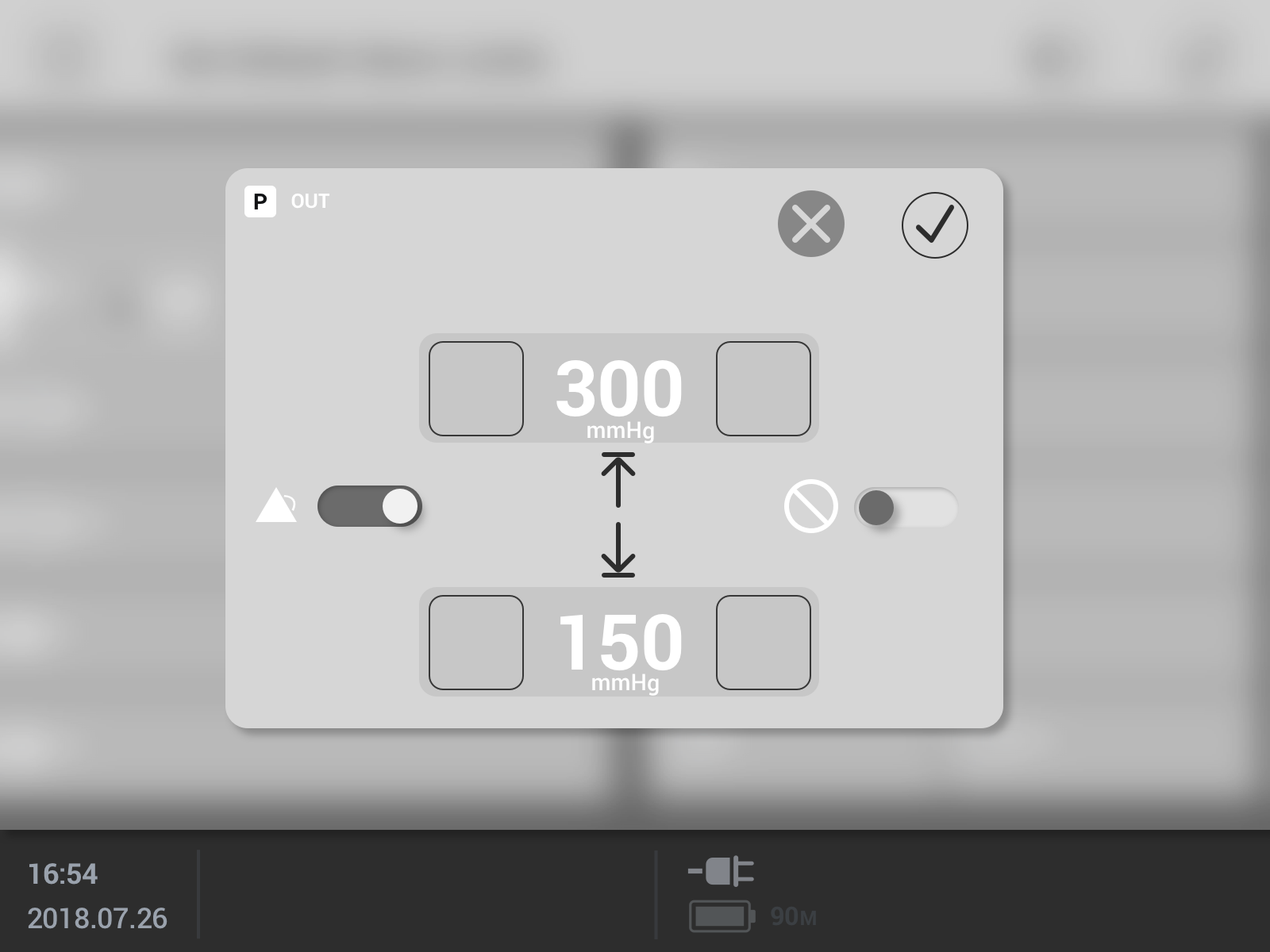

Annotated wireframes defining the SO2 workflow

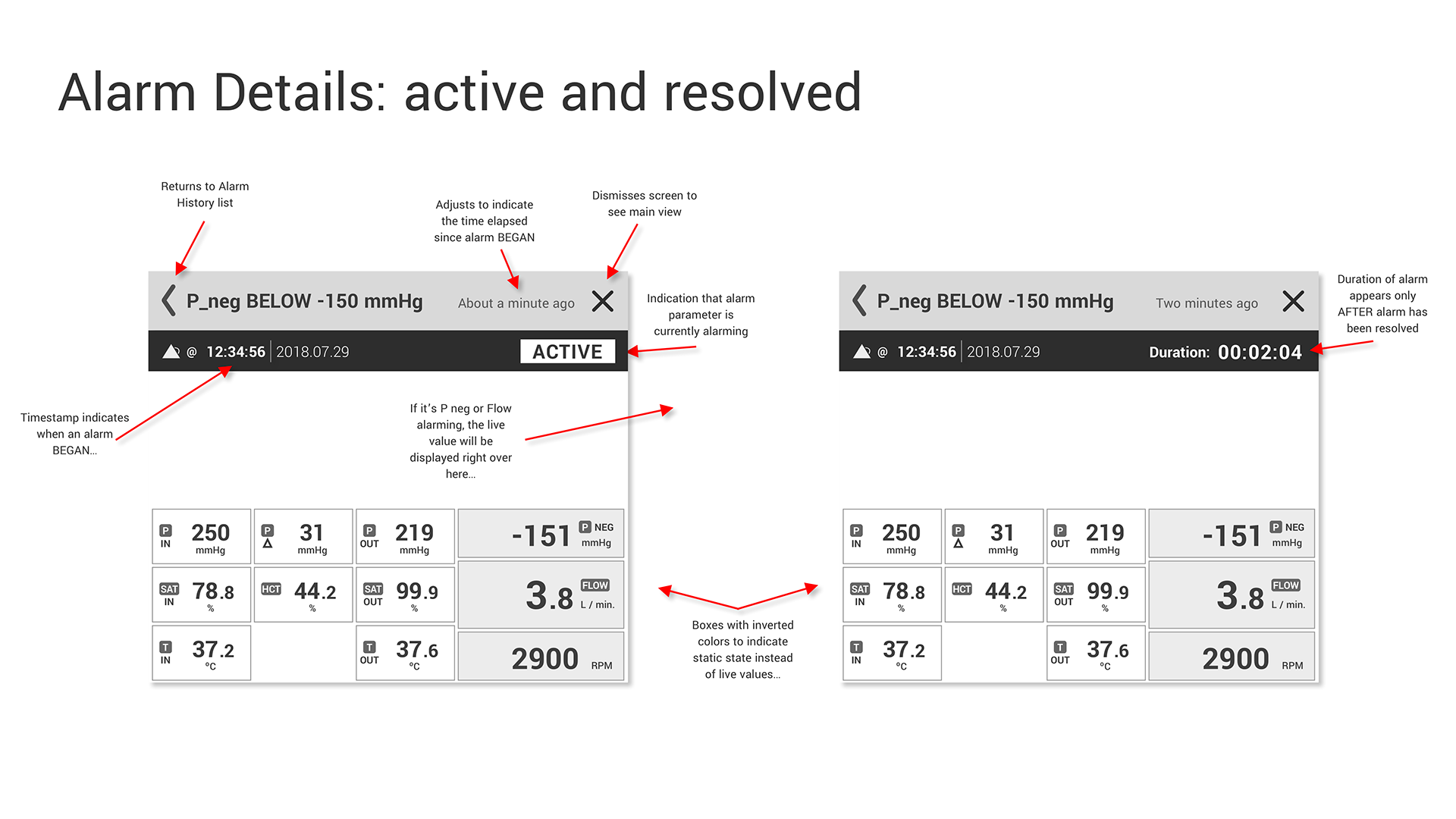

Annotated wireframes highlighting state changes

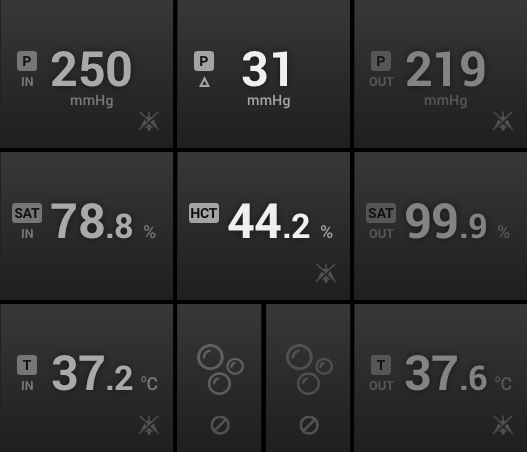

home screen

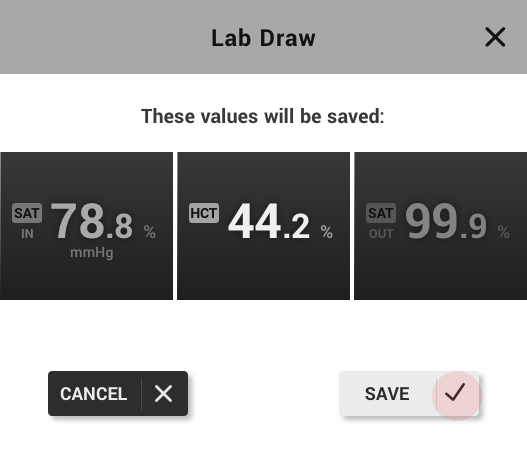

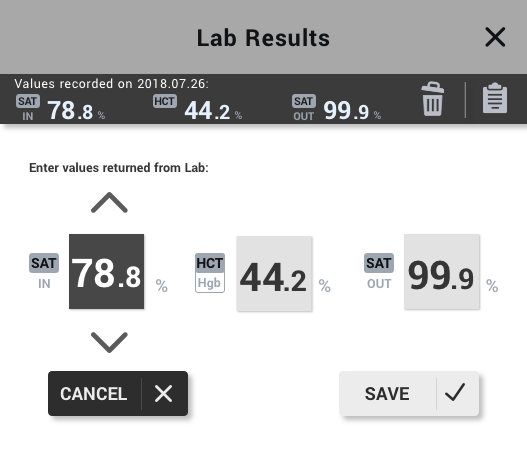

capturing values

editing captured values

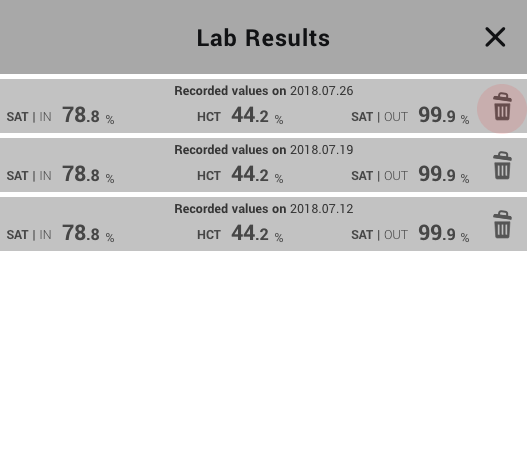

viewing previous saved values

option A

option B

option C

Animated GIF created with Principle and Sketch.

This storyboard version of the animated interaction was easier to leave on screen and refer to during discussions with our client.

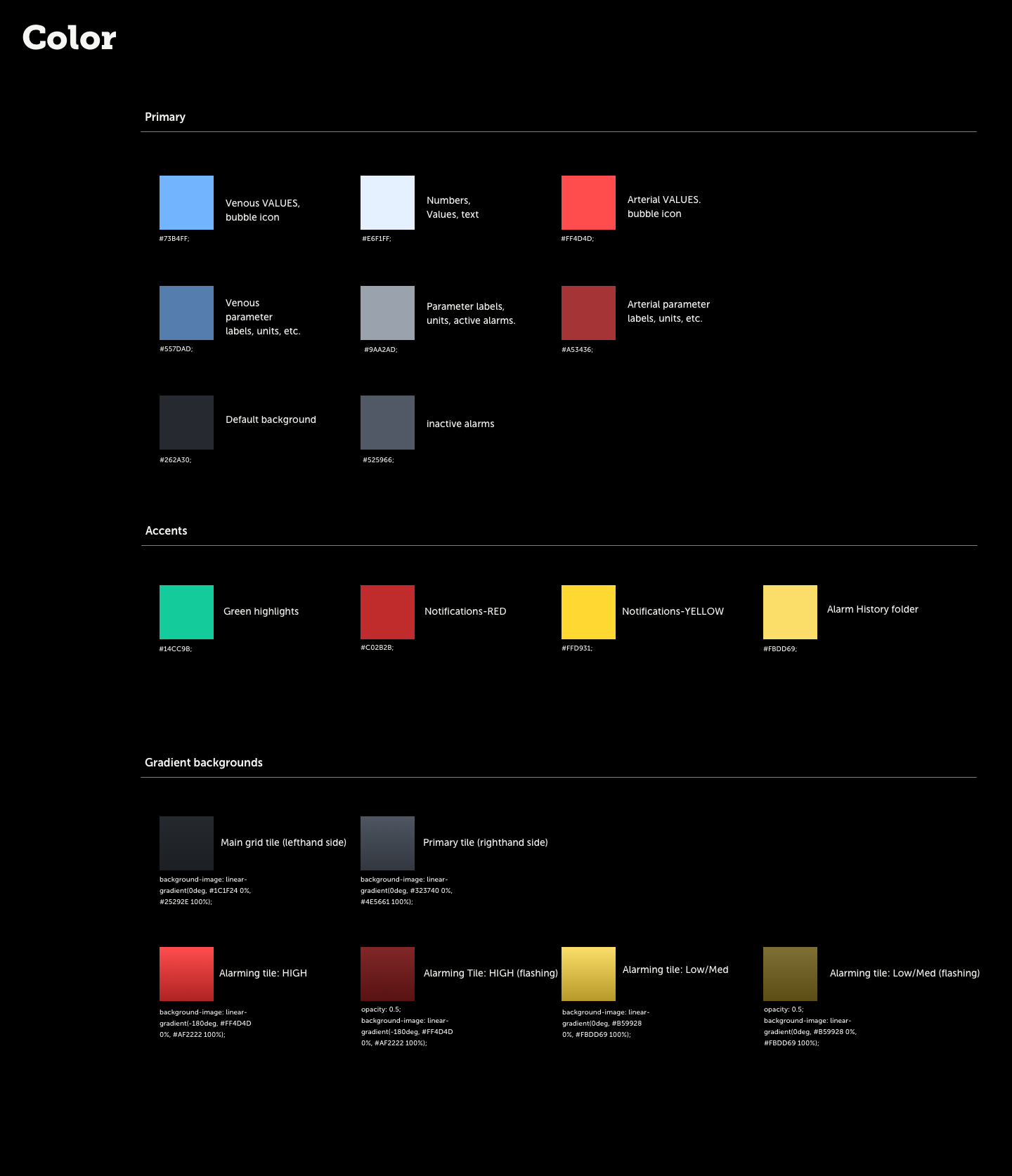

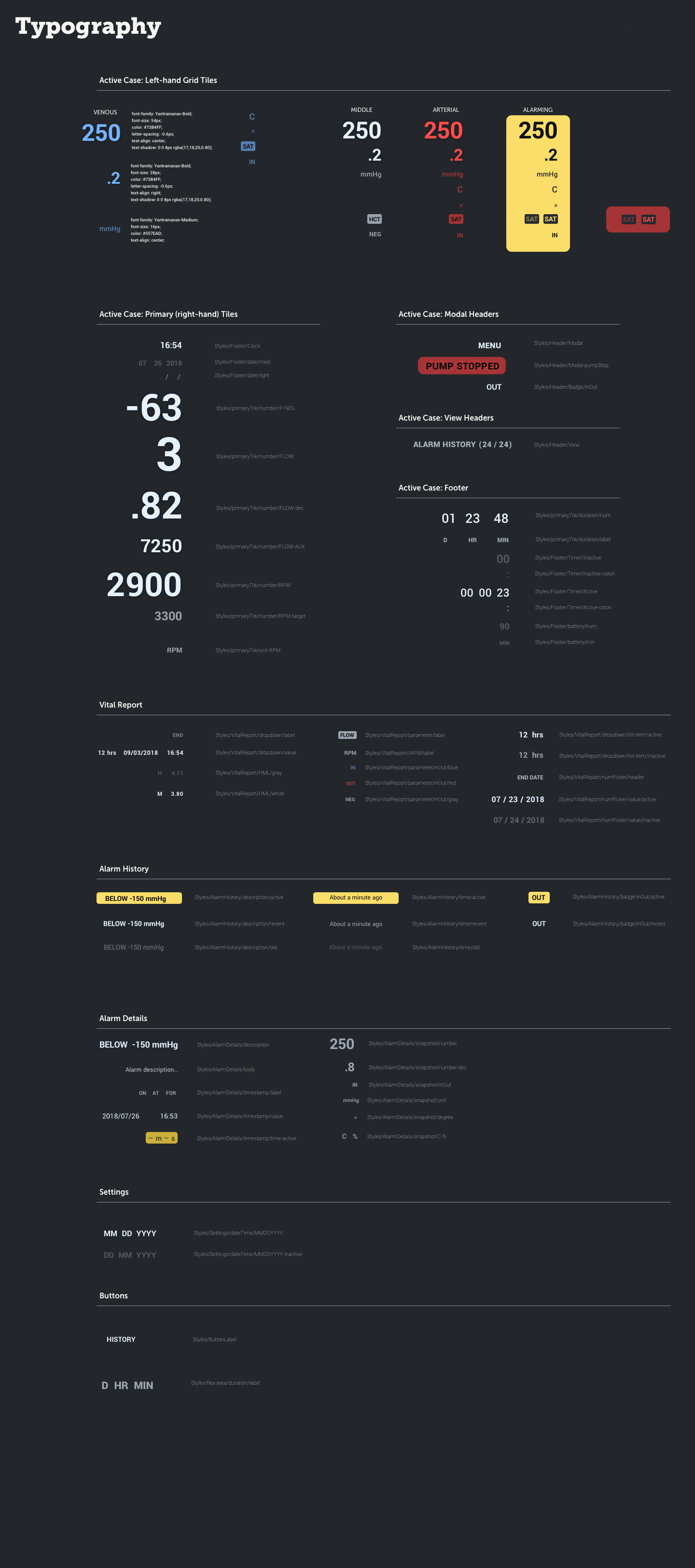

Designing the interaction vernacular for this medical device required clear indicators around navigation elements: what are the calls to action (CTAs) for each state of a screen, and how does the interface communicate the system's status?

We loaded our interactive prototype onto a tablet using InVision. This let our client test the prototype with clinicians (potential users) quickly and easily at trade shows and exhibitions.